Abstract

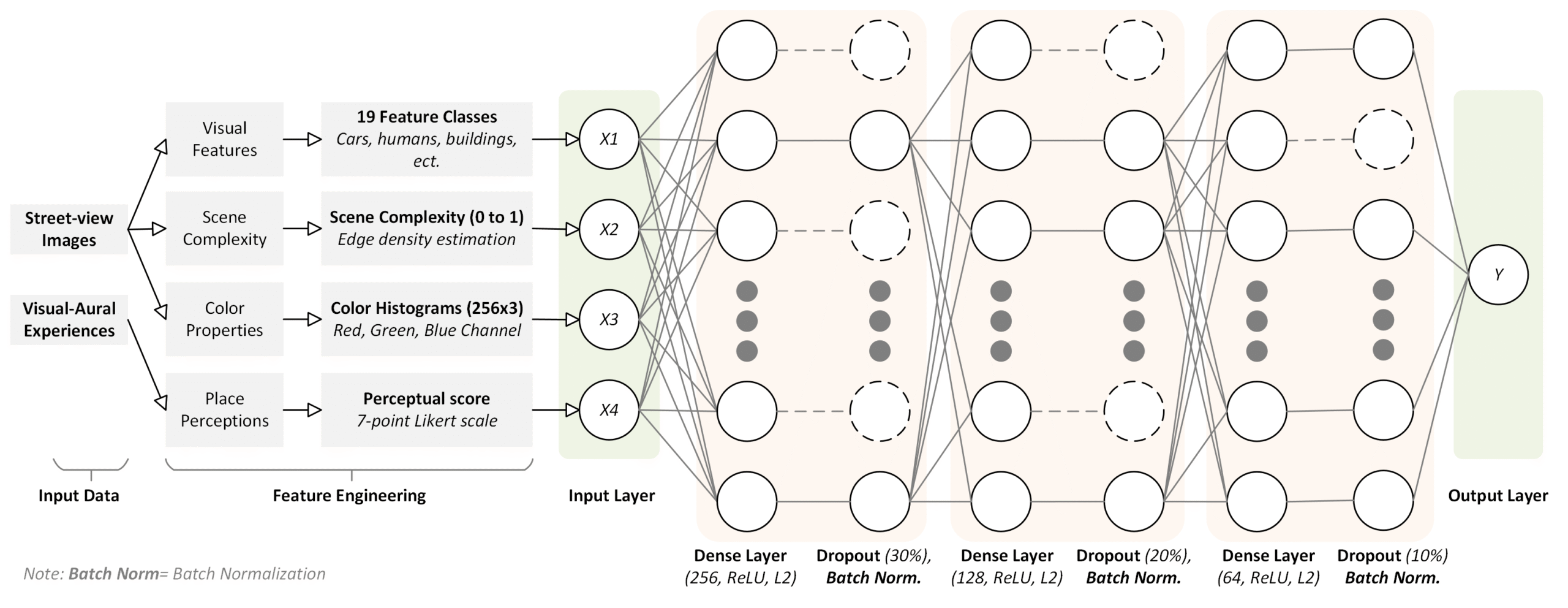

Understanding how people perceive urban environments is critical to creating sustainable, engaging, and inclusive cities, particularly amid rapid, economically driven urban expansion. Although various methods of measuring Human Perception of Place (HPP) have been developed using computer vision and street-view images, these approaches are purely visual and neglect the influence of other senses on subjective human perception, which introduces visual bias. In addition, the limited generalizability of predictive models poses a challenge when applying them across diverse urban contexts. In response, this study proposes a scalable framework for capturing HPP using a transfer learning-based Feedforward Neural Network (FNN) combined with cross-modal techniques that integrate visual and auditory data. Leveraging the Place Pulse dataset, the proposed models incorporate visual-aural experiences to mitigate visual bias and improve prediction accuracy. The results indicate that the proposed approach significantly outperforms traditional tree-based and margin-based regression models, achieving an average R² improvement of 27% over GBRT and aligning more closely with public consensus. The findings also show that architectural diversity, active street life, and vibrant soundscapes positively influence perceptions of beauty, liveliness, and wealth. Conversely, areas with high traffic and chaotic noise are often perceived as less safe, despite their vibrancy. This research underscores the value of multisensory data in capturing the complexity of human place perception and provides practical guidance for urban planners and policymakers, supporting data-driven, human-centered planning strategies that foster livability and well-being in diverse urban settings.

DOI: https://doi.org/10.1016/j.cities.2025.106286

Journal: Cities